Artificial intelligence has been (and continues to be) a popular topic of discussion in areas ranging from science fiction to cybersecurity. But as much fun as it might be to discuss my favorite sci-fi AI stories, let’s set aside the works of Asimov, Bradbury and other storytellers to focus on the role of AI in cybersecurity.

The basic concept behind deploying AI-based technologies in cybersecurity is that they should be a force multiplier for hardworking and often overburdened security professionals. That’s especially true of analysts who work in security operations centers. SOC analysts, as we consistently read in the annual Devo SOC Performance Report™, are often overwhelmed by the ever-growing number of alerts that cross their screens each day. This alert fatigue and related pressures contribute to an industry-wide burnout problem.

Ideally, AI could play a critical role in helping SOC analysts keep pace with (and, we hope, stay ahead of) increasingly savvy and relentless malicious actors who are using AI effectively to achieve their nefarious objectives. Unfortunately, that doesn’t appear to be the case.

Survey Says?

Devo recently commissioned Wakefield Research to conduct a survey of IT security professionals to determine how they feel about AI. One result that stands out in the report is the perception of an ongoing battle between enterprise security teams and the cyberthreat actors who are using every means available to try to infiltrate and compromise all types of data. Here are a few key survey findings.

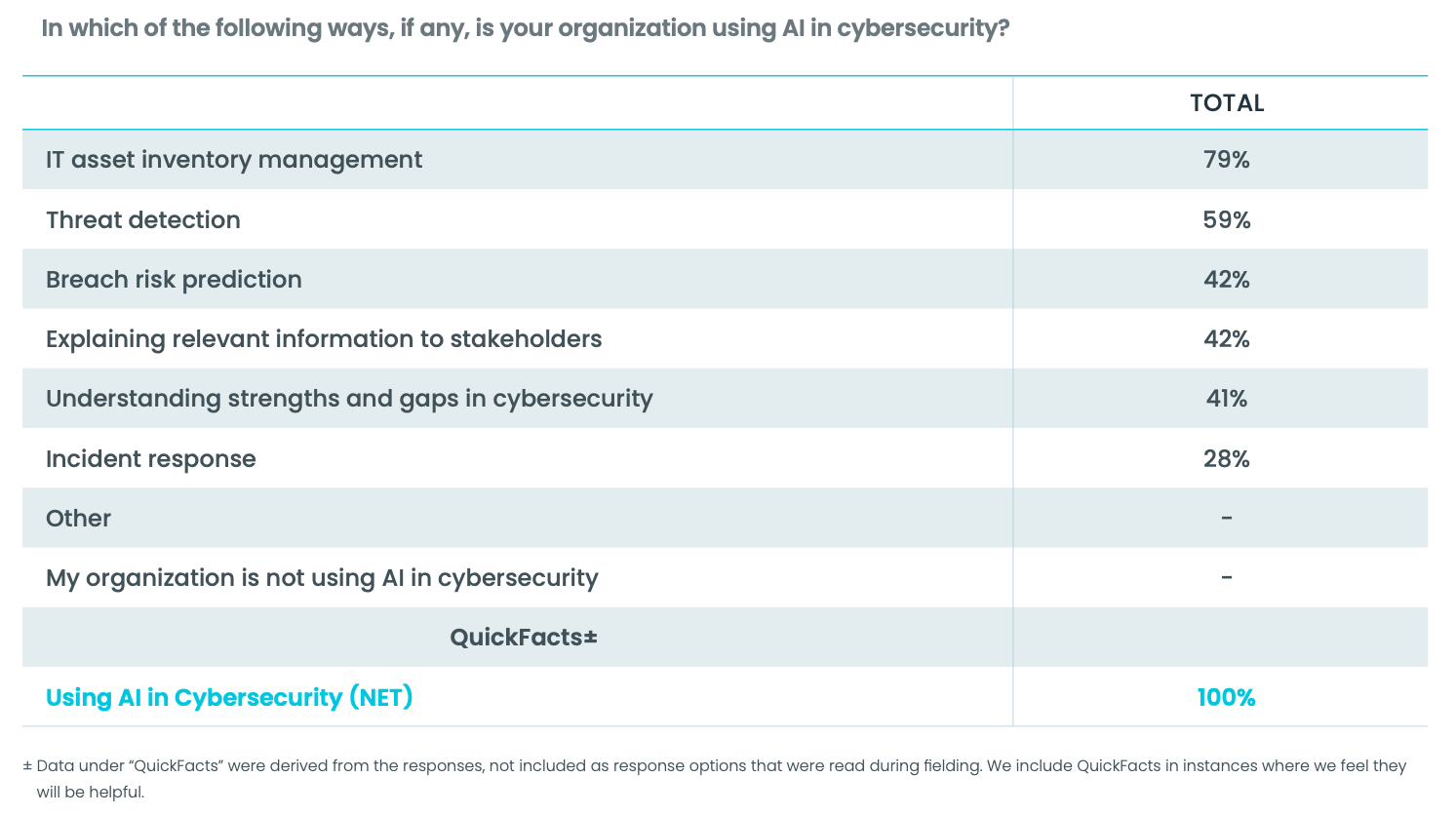

First, the good news: all survey respondents (100%) said their organization is using AI in one or more areas. The top usage area is IT asset inventory management, followed by threat detection (which is encouraging to see), and breach risk prediction (ditto).

Meanwhile, 67% of respondents said their organization’s use of AI “barely scratches the surface.” While that response indicates the need for improvement, it does show that security professionals see value in the ability of AI to help them do their jobs more efficiently and effectively.

When someone uses the phrase “first the good news,” it telegraphs that some not-so-good news will follow. Without further ado, here are some less-positive survey findings.

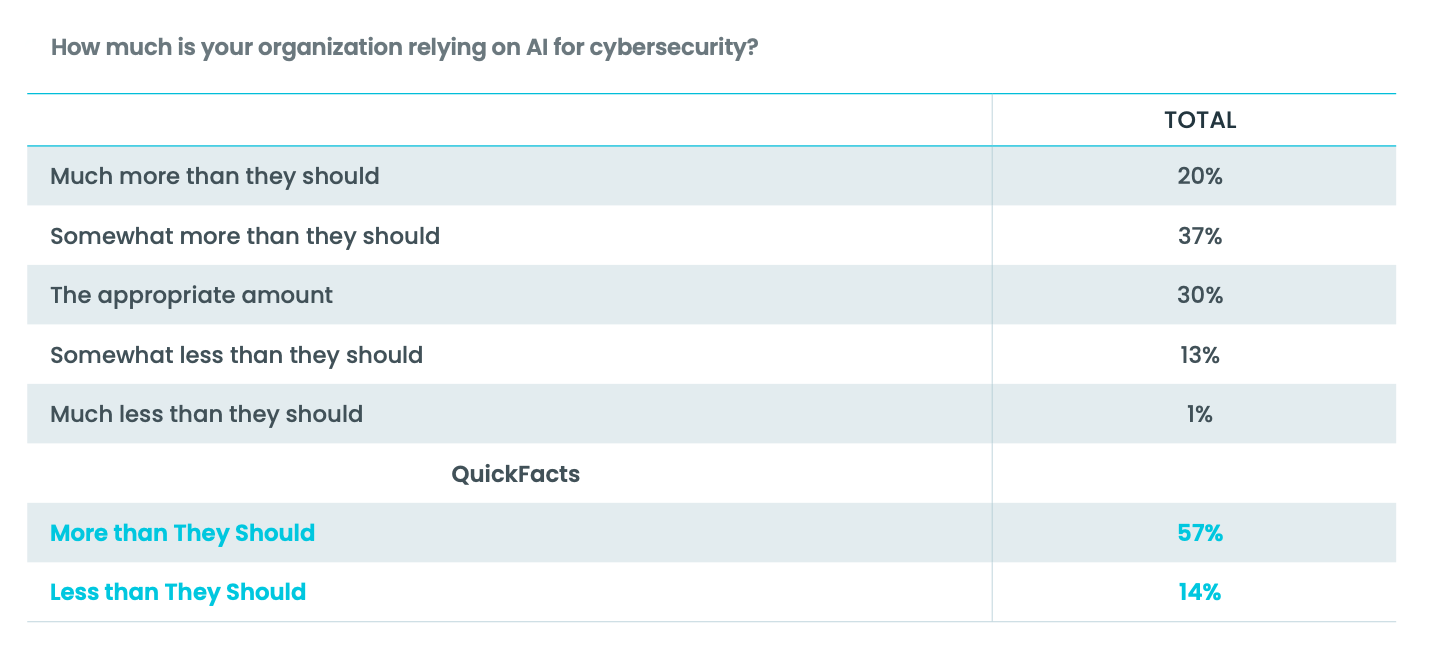

Let’s begin by examining the question of how respondents feel about their organization’s reliance on AI in their cybersecurity program.

More than half of respondents believe their organization is, at least currently, relying too much on AI. Almost one-third think the reliance on AI is appropriate, while a minority of respondents think their organization isn’t relying on AI enough.

AI Causes Security Challenges

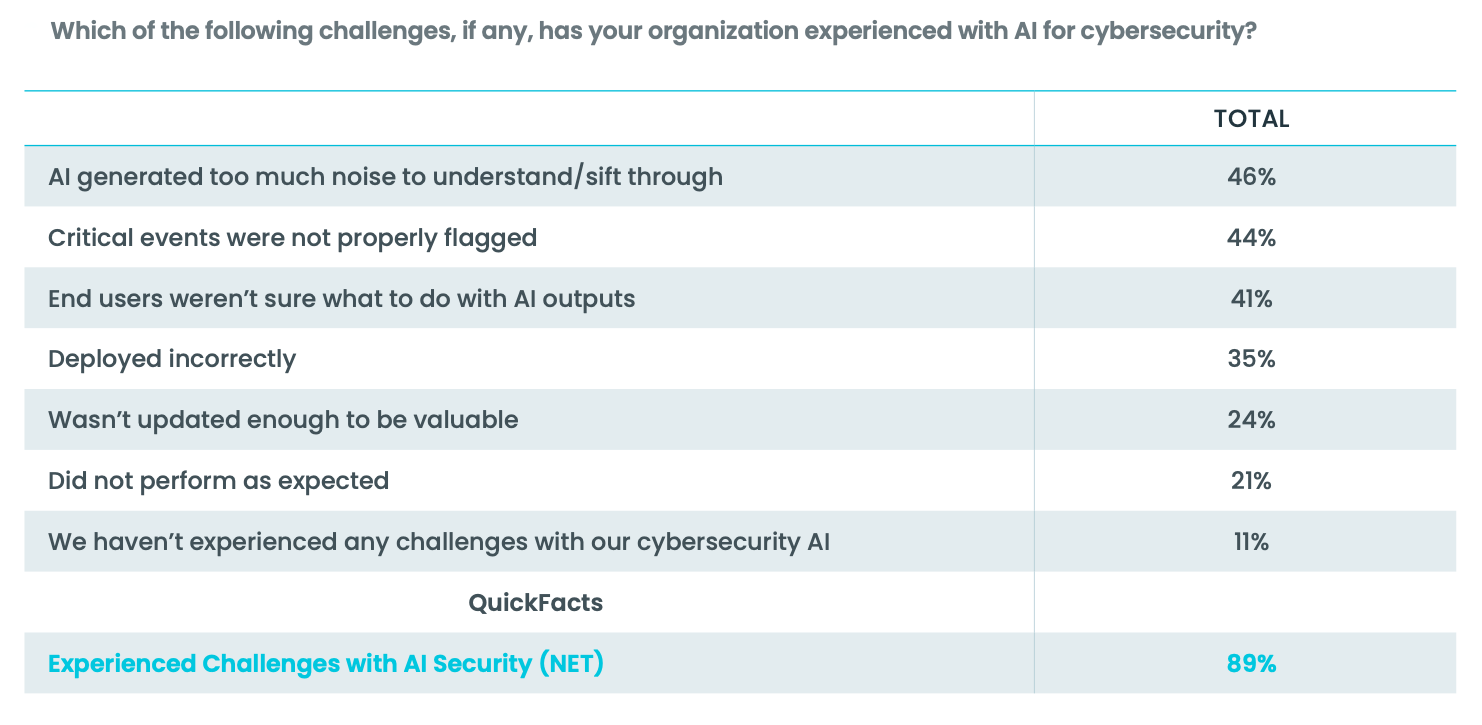

The perception among those surveyed that their organizations may be relying too much on AI is reflected in the response to the next question about the challenges it poses.

When almost 90% of those surveyed agree on something, it’s a pretty good indicator there’s fire accompanying the smoke. That seems to be the case when it comes to AI and cybersecurity.

When asked where in their organization’s security stack AI-related challenges occurred, core cybersecurity functions did not fare well. While IT asset inventory management was the top AI problem area according to 53% of respondents, three cybersecurity categories also received less-than-stellar responses:

- Threat detection (33%)

- Understanding cybersecurity strengths and gaps (24%)

- Breach risk prediction (23%)

It’s interesting to note that incident response was cited by far fewer respondents (13%) for posing AI-related challenges.

Where Do We Go From Here?

It seems clear that while AI is already being used in cybersecurity, the results are mixed. Our news release about the survey results calls it the “Big AI Lie.” That headline refers to the fact that not all AI is as “intelligent” as the name implies, and that’s even before accounting for mismatches in organizational needs and capabilities.

Cybersecurity is often hit by what’s commonly known as the “silver bullet” problem: the search for a fast and effective “magic” solution to hard challenges. AI is the latest technology to bear that stigma. That’s why organizations and security teams must be deliberate and results-driven as they evaluate and deploy AI solutions. Organizations must be sure to work with experts in AI technology or they risk failure in a critical area with little to no margin for error.